As Product Managers, while working on a product, we have multiple ideas - some are big changes while some are small. Some are intuitive, while some are data backed. In such a scenario, how do we make sure that we don’t take a decision that affects the product in a negative way?

The answer is experimentation and data backed decisions, and A/B testing is a powerful tool that helps us do this. How? Let’s talk about it in depth.

Making Bad Decisions

While building a product, you are always trying to either build the best new feature or improve an existing feature that will move the metrics. The keyword here is “improve”. We want metrics to move, but in a direction that helps the overall goal of the company.

If your company or product is in a growth stage, you actively make a lot of small changes in the app in order to increase retention, decrease churn, improve the signup conversion, etc.

But, how do you think of these small changes? And more importantly, how do you know that this change will definitely help you move the metric? How do you know for sure? Well, you can’t.

Now, let’s imagine a scenario.

Let’s say you are a Product Manager at an e-commerce company and the Head of Product comes to you and says that we need to decrease the size of the purchase button on the checkout page. Now, if you are naive enough, you might think that “yeah, we can do that” and get the engineers to implement the change for all the users.

After a week, you realise that the conversion numbers for the checkout page have decreased by 2%. After another week, you realise that the conversion numbers have decreased by 5%. Now, it’s something to worry about.

You do the RCA (Root Cause Analysis) and find out that everything else is working fine and the only change implemented was the button size. You quickly go back to the previous button size and the numbers become normal again. Everyone is happy.

But, is it ideal? Is such experimentation right for a product with millions of users? What about the revenue and users lost because of this small 2-week “experiment”?

This was a bad decision.

Now, let’s imagine another scenario where the product manager is not naive.

You are a Product Manager at an e-commerce company and the Head of Product comes to you and says that we need to decrease the size of the purchase button on the checkout page. Because you are not naive, the first thing you ask is “Why?”

You get this reply: “We believe that decreasing the size of the button will increase the conversion on the checkout page by 5% in the next 2 weeks”

The statement above is called a hypothesis. A product hypothesis is an assumption made within a limited understanding of a specific product-related situation. It further needs validation to determine if the assumption would actually deliver the predicted results or add little to no value to the product.

Now, let’s say you agree with the hypothesis but because it’s a hypothesis, we can’t be sure. We have to test it.

How should we test this hypothesis?

Should we just make the change and see what happens? No, because we are not naive or stupid.

What if we only show the change to a small number of people, say 5% of the users, and observe them separately for the next 2 weeks? This way, we will be able to observe how the users react to the change without affecting the overall numbers much.

Sounds smart, right? Maybe it is.

If we do this, over the next 2 weeks, we can observe the conversion rate for “normal users” i.e. the 95% base and compare it with the conversion rate of the “special users” i.e. the random 5% selected for this test.

If the 5% are showing the results as expected - it means that we have validated the hypothesis.

If not, we will say that we have failed to validate the hypothesis, and hence can’t roll out the change.

So, the overall metrics for the product are not affected much and we complete our experiment too. Pretty simple, right?
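To make the "random 5%" idea concrete, here is a minimal Python sketch of one common way to pick the test group: hash each user ID and put the lowest 5% of hash values in the test group. The function name `assign_group`, the experiment name and the user IDs are all made up for illustration; your own experimentation tool may do this differently.

```python
import hashlib


def assign_group(user_id: str, experiment: str, test_share: float = 0.05) -> str:
    """Return 'test' or 'control' for a user (hypothetical helper)."""
    # Hash the experiment name together with the user id, so the same user
    # always lands in the same group for this experiment.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits to a number in [0, 1).
    bucket = int(digest[:8], 16) / 16**8
    return "test" if bucket < test_share else "control"


# Made-up user ids, only to check that roughly 5% end up in the test group.
users = [f"user-{i}" for i in range(100_000)]
groups = [assign_group(u, "small-purchase-button") for u in users]
test_fraction = groups.count("test") / len(groups)
print(f"share of users in the test group: {test_fraction:.3f}")
```

Because the group depends only on the user ID and the experiment name, a user sees the same version of the button every time they come back during the 2 weeks.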

If you understand everything above, you are ready to understand A/B Testing.

What is A/B Testing?

A/B testing, or ‘split-testing’, is a method in which you split your audience into two or more groups to test a feature, a change, or a component. This split can be done on a 50-50 basis or any other ratio depending on the sample size of the audience.

These two groups receive two different versions of the same feature. It could be something as small as the size of a button, colour of a button or even something big like reordering the onboarding flow of the product completely.

After a certain amount of time, the results for both the groups are observed and, based on the results, it is decided which version will be rolled out to the entire user base. With every A/B test, there is a metric attached that we want to improve.

Let’s take an example: Duolingo

Several years ago, Duolingo began tackling perhaps the most existential question for an app startup: what was causing the leak at the top of their funnel — and how could they stop it?

Duolingo was seeing a huge drop-off between the number of people who were downloading the app and the number who were signing up. And it's obviously really important for people to sign up: first, it suggests they're going to come back and, second, it signals the opportunity to turn one visit into a rewarding, continuous experience.

The team at Duolingo thought that the fix might be counterintuitive: While it seems like you should ask users to sign up immediately, when you have the greatest number of interested users and their attention, perhaps letting people sample your product first is the most powerful pitch.

This was a hypothesis.

The Tests:

The first test they ran for this was to simply move the signup screen back a few steps for some users.

The results - Simply moving the sign-up screen back a few steps led to about a 20% increase in DAUs.

The first test had made a significant impact, so they decided to refine the test and run it again. They asked themselves: “When should we ask users to sign up? In the middle of a lesson? At the end? And how should we do it?”

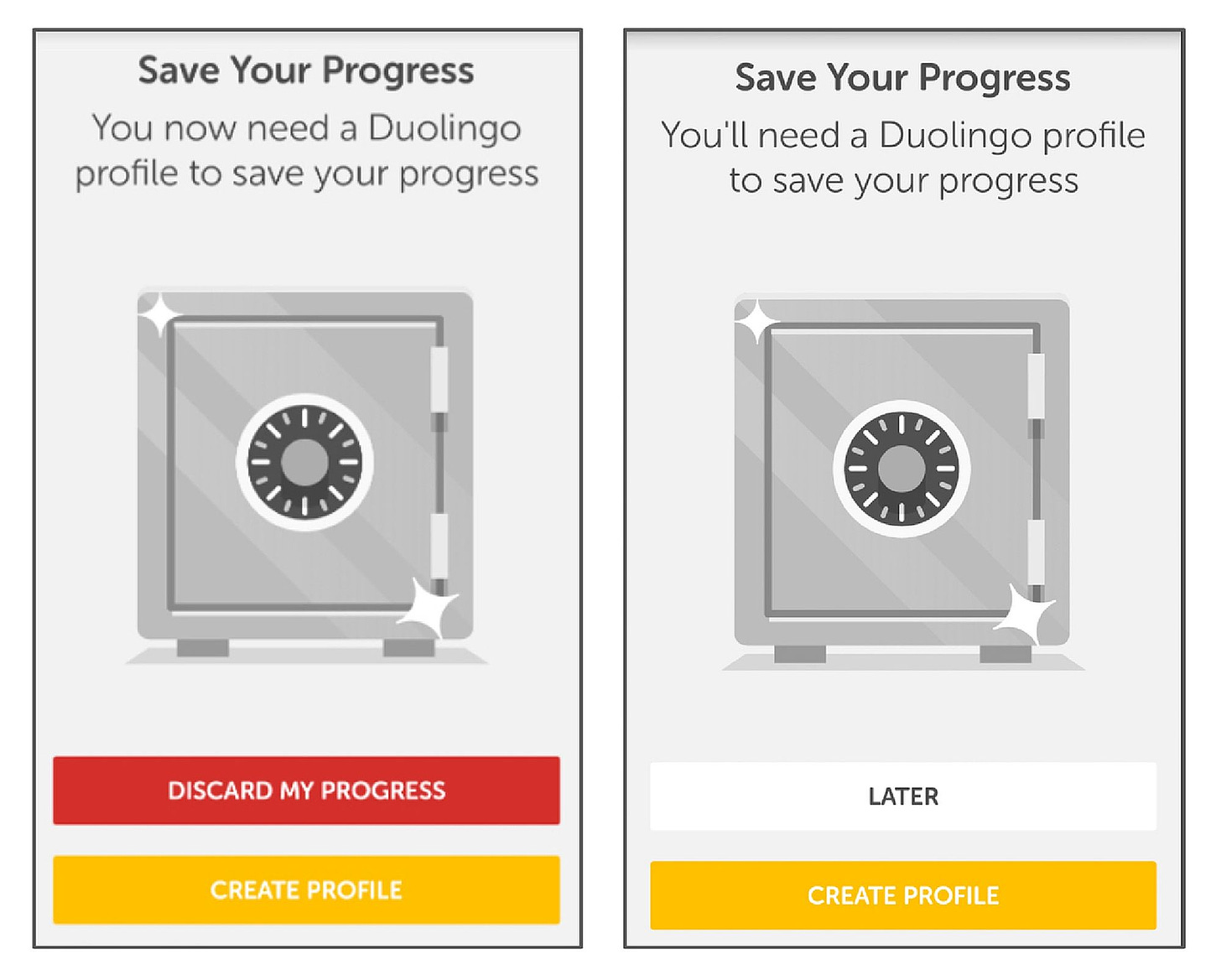

On the page that they were showing to the user, there was a big red button at the bottom of the screen that said ‘Discard my progress’, which basically meant ‘Don’t sign up.’

They suspected that many people were conditioned to simply hit the most prominent button on a screen without thinking about it — and that Duolingo was losing still-interested users. So, they swapped that design for a subtle button that simply read “Later”.

That change introduced “soft walls” — that is, optional pages that ask users to sign up, but allow them to keep going by hitting “Later” — and opened the door to a new arena for experimentation.

And then, they ran an A/B test with this change and came to the conclusion that it works. Together, these subsequent changes to delayed sign-up (optimizing hard and soft walls) yielded an 8.2% increase in DAUs.

Another Example: Netflix

Netflix is well known for being a great A/B testing playground! In fact, every product change Netflix considers goes through a rigorous A/B testing process before becoming the default user experience. You might notice that your own feed is constantly running experiments.

For example, you may have noticed that titles of the movies and shows are displayed with different images at different times. One day it’s Captain America in the preview for The Avengers, and the next it’s Black Widow. By experimenting with these changes, the algorithm is figuring out which character images are most likely to make users click.

How to conduct an A/B Test?

The process of conducting an A/B test is pretty simple.

Step 1: Define your goal

Step 2: Look at your current data

Step 3: Come up with a hypothesis

Step 4: Perform A/B Test and analyse the results

Step 5: Take action and repeat with something else

Now, let’s look at an example:

You are working at an e-commerce company and you want to increase the clicks on the “Buy” button. Now, as a PM, you are supposed to come up with an idea.

Step 1: Define your goal - Increase the clicks on the buy button on product pages

Step 2: Look at your current data - You go through the data and find that the current click rate on the product page is 20%, and that the buy button is too small for the user to notice.

Step 3: Come up with a hypothesis - “Making the CTA button more prominent (bigger) will drive 20% more clicks”

Step 4: Perform A/B Test - You increase the size of the buy button only for 20% of your users (test group) and let it be the same for 80% of the users (control group). Then you observe the numbers over the next 1 week.

After 1 week, you find that the click rate was 21% for the test group, while it stayed at 20% for the control group.

Although the click rate is higher, the relative change is only 5% (from 20% to 21%), way below the 20% in our hypothesis, so we won’t implement the change.
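The arithmetic behind that decision can be sketched in a few lines of Python. The group sizes below (80,000 control users and 20,000 test users) are assumptions made up for this sketch, since the example only gives percentages. The two-proportion z-test is also an addition that the example above does not use: it is one standard way to check whether a difference like 20% vs 21% could just be noise.

```python
import math


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Two-sided z-test for the difference between two click rates."""
    rate_a = clicks_a / users_a
    rate_b = clicks_b / users_b
    # Pooled click rate under the assumption that both groups behave the same.
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Assumed group sizes (not given in the example above).
control_users, control_clicks = 80_000, 16_000  # 20% click rate
test_users, test_clicks = 20_000, 4_200         # 21% click rate

control_rate = control_clicks / control_users
test_rate = test_clicks / test_users
absolute_lift = test_rate - control_rate      # difference in click rate
relative_lift = absolute_lift / control_rate  # change relative to control

target_relative_lift = 0.20  # the hypothesis asked for 20% more clicks
z, p_value = two_proportion_z_test(
    control_clicks, control_users, test_clicks, test_users
)

print(f"absolute lift: {absolute_lift:.3f}")
print(f"relative lift: {relative_lift:.1%}")
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("hypothesis met" if relative_lift >= target_relative_lift else "hypothesis not met")
```

Note the two different questions here: the z-test asks "is the difference real?", while the comparison with `target_relative_lift` asks "is the difference as big as we hoped?". A change can pass the first and still fail the second, which is what happens in this example.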

Coming up with and framing the Hypothesis

Not having a hypothesis is like throwing spaghetti at a wall to see what sticks. Hypotheses are important because they protect us from our own biases.

A hypothesis could be framed in this way:

Based on [evidence], we believe that if we change [your change] for [customer segment], it will help them [impact]. We will know this is true if we see [your expected change] in [primary metric]. This is good for business because an increase in [primary metric] means an increase in [Business KPI].
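To see the template filled in, here is a small Python sketch that plugs in the buy button example from earlier. The class name `Hypothesis` and the values for customer segment and Business KPI are assumptions made up for illustration; the rest is taken from the example.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """One field per placeholder in the hypothesis template."""
    evidence: str
    change: str
    segment: str
    impact: str
    expected_change: str
    primary_metric: str
    business_kpi: str

    def statement(self) -> str:
        return (
            f"Based on {self.evidence}, we believe that if we change {self.change} "
            f"for {self.segment}, it will help them {self.impact}. "
            f"We will know this is true if we see {self.expected_change} "
            f"in {self.primary_metric}. This is good for business because an increase "
            f"in {self.primary_metric} means an increase in {self.business_kpi}."
        )


buy_button = Hypothesis(
    evidence="the buy button being too small to notice",
    change="the size of the buy button",
    segment="product page visitors",          # assumed segment
    impact="find the buy button more easily",
    expected_change="20% more clicks",
    primary_metric="the buy button click rate",
    business_kpi="orders",                    # assumed Business KPI
)
print(buy_button.statement())
```

Writing the hypothesis this way forces you to fill every placeholder before the test starts, so nobody can redefine "success" after the results come in.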

Things you should take care of while implementing A/B Tests

- Always test comparable things, one at a time

While conducting A/B tests, make sure you are not implementing more than one change at a time. Why? Because if you make multiple changes, you won’t be able to attribute the change in metrics to a specific change. If you change both the size of the button and the text of the button, you won’t know whether the change in the click percentage is because of the text or the button size.

Hence, we only make one change at a time, with comparable things.

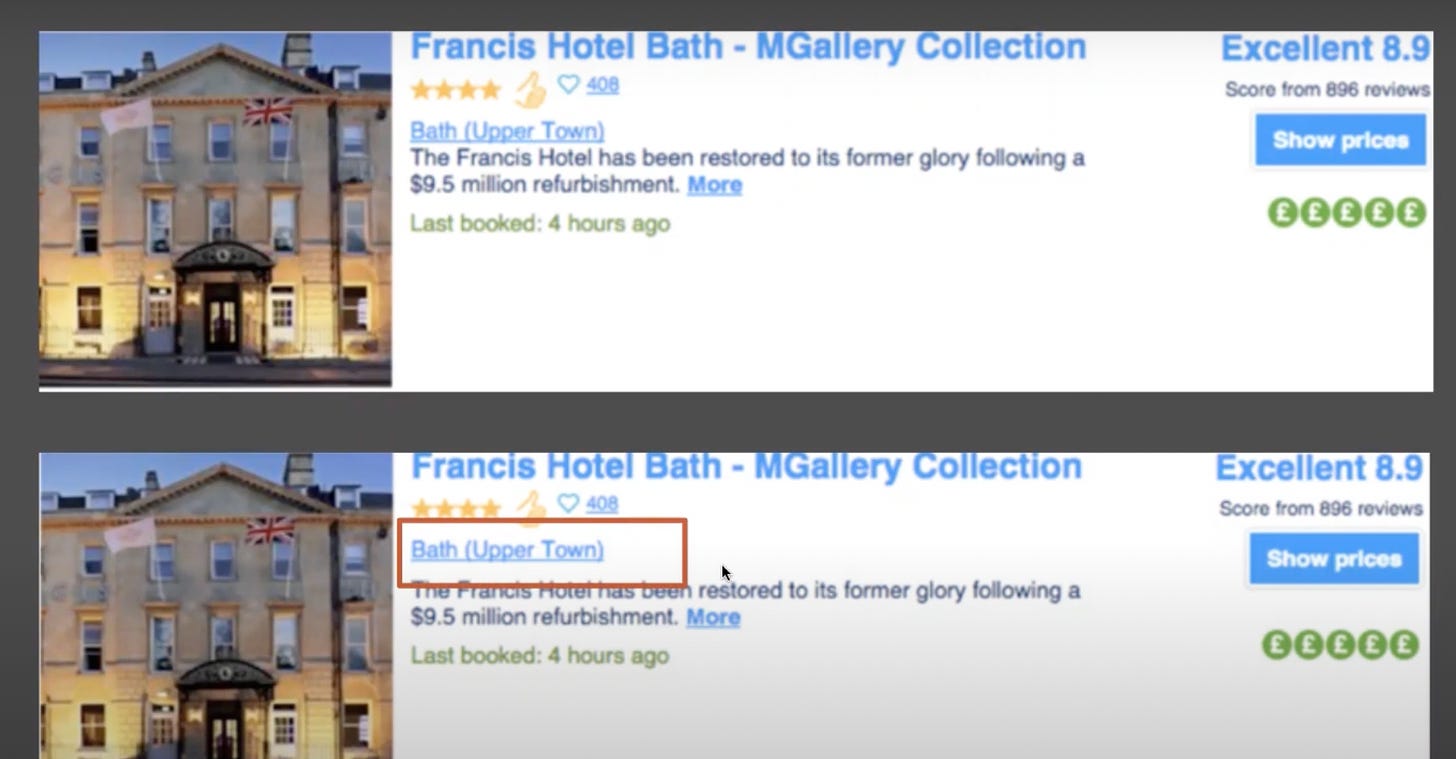

Example:

Booking.com, a famous hotel-booking website, ran this small A/B test in which they increased the spacing (marked in red above). This single change brought better conversion from this page.

- Make sure you know the primary metric of your experiment

The primary metric of an experiment is the one metric whose result must be conclusive (statistically significant) before you can accept the outcome of the experiment.

For example, if you increase the size of the button, it can affect metrics like conversion rate, time spent on the page, etc. But the only metric that matters is the conversion rate. If the test shows that the change in button size increased time spent by 20% but didn’t improve the conversion, then the test has failed, because the primary metric for the test is conversion, which hasn’t moved.

Only the primary metric can tell you conclusively if your test has a positive or a negative impact.

- Make sure your experiment is not impacting the health metrics

Every product has a set of metrics that signify the health of the service you deliver, which means that the product is being used by the users easily and efficiently without any inconvenience. Examples are website performance/speed and backend errors.

Whenever you run an A/B Test, you have to make sure that running that test doesn’t impact those health metrics in any way.
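Here is a minimal Python sketch of how the primary metric and the health metrics could be checked together. Everything in it is hypothetical: the metric names, the tolerance values and the three possible decisions are assumptions for illustration, not a standard rule.

```python
def evaluate_experiment(primary_lift, min_primary_lift, health_changes, tolerances):
    """Return (decision, breached_health_metrics).

    health_changes: relative increase of each "bad" health metric in the
    test group compared to control (0.15 means 15% worse).
    tolerances: the largest increase we are willing to accept per metric.
    """
    breached = [
        name for name, change in health_changes.items()
        if change > tolerances[name]
    ]
    # A broken health metric overrides any win on the primary metric.
    if breached:
        return "stop", breached
    if primary_lift >= min_primary_lift:
        return "ship", []
    return "do not ship", []


# Made-up numbers: conversion went up 6%, but backend errors went up 15%.
decision, breached = evaluate_experiment(
    primary_lift=0.06,
    min_primary_lift=0.05,
    health_changes={"page_load_time": 0.02, "backend_error_rate": 0.15},
    tolerances={"page_load_time": 0.05, "backend_error_rate": 0.10},
)
print(decision, breached)
```

In this made-up case the primary metric looks good, but the test still gets stopped because one health metric went past its tolerance.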

- Make sure that none of those tests conflict with one another

At any point in time, there are multiple A/B tests and experiments running on a product. Your job is to make sure, before you run one such test, that it will not get in the way of some other test.
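One simple way to catch such conflicts is to keep a list of experiments and flag any two that touch the same part of the product during overlapping dates. The sketch below assumes a made-up experiment list with hypothetical names, surfaces and dates; real experimentation platforms usually have their own way of handling this.

```python
from datetime import date
from itertools import combinations

# Hypothetical experiment calendar, for illustration only.
experiments = [
    {"name": "bigger-buy-button", "surface": "product_page",
     "start": date(2024, 3, 1), "end": date(2024, 3, 14)},
    {"name": "buy-button-copy", "surface": "product_page",
     "start": date(2024, 3, 10), "end": date(2024, 3, 24)},
    {"name": "checkout-spacing", "surface": "checkout_page",
     "start": date(2024, 3, 1), "end": date(2024, 3, 14)},
    {"name": "new-product-gallery", "surface": "product_page",
     "start": date(2024, 4, 1), "end": date(2024, 4, 14)},
]


def find_conflicts(experiments):
    """Return pairs of experiments on the same surface with overlapping dates."""
    conflicts = []
    for a, b in combinations(experiments, 2):
        same_surface = a["surface"] == b["surface"]
        dates_overlap = a["start"] <= b["end"] and b["start"] <= a["end"]
        if same_surface and dates_overlap:
            conflicts.append((a["name"], b["name"]))
    return conflicts


conflicts = find_conflicts(experiments)
print(conflicts)
```

A flagged pair doesn't always mean you must cancel one test, but it is a signal to talk to the other team before launching.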

- How long should a test run?

The answer to this question depends on 2 things:

- How much traffic do you need?

- What is the minimum change you need to detect?

Ideally, try to run the test for 1 or 2 full week cycles so that you capture a diverse audience. It also helps you get more traffic.

Try to run the test on as much traffic as possible. You can run the test on lower traffic too, but then the minimum detectable change you want to observe must be higher. For example, if you are making a change to decrease customer support tickets and you have low traffic, keep the minimum required change at 10%.
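The trade-off between traffic and the minimum detectable change can be sketched with the standard sample size approximation for comparing two rates. Treat this as a rough estimate under stated assumptions: a 5% significance level, 80% power, a 20% baseline rate and 1,000 daily users per group are all values picked for illustration, and the helper names are made up.

```python
import math
from statistics import NormalDist


def users_per_group(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate users needed in EACH group to detect a relative change."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def weeks_needed(users_needed_per_group, daily_users_per_group):
    """Round the duration up to full weeks, as suggested above."""
    days = math.ceil(users_needed_per_group / daily_users_per_group)
    return max(1, math.ceil(days / 7))


# Assumed baseline: 20% conversion, 1,000 new users per group per day.
n_small_change = users_per_group(baseline_rate=0.20, relative_mde=0.05)
n_large_change = users_per_group(baseline_rate=0.20, relative_mde=0.10)

print("users per group to detect a 5% change:", n_small_change)
print("users per group to detect a 10% change:", n_large_change)
print("weeks for 5%:", weeks_needed(n_small_change, 1_000))
print("weeks for 10%:", weeks_needed(n_large_change, 1_000))
```

Doubling the minimum change you want to detect cuts the required traffic to roughly a quarter, which is why low-traffic products should aim for bigger, bolder changes in their tests.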

Why do Product Managers need A/B Testing?

A/B testing is a crucial tool for product managers to drive success. Here's how and why it helps:

- Data-driven decision making

With A/B tests, you get to replace, or even better, support your gut feelings with concrete evidence. Conducting experiments and tests reduces the risk of implementing ineffective changes.

- User behaviour insights

Running A/B tests reveals how users interact with different versions of a product, providing new insights about how users feel. It also helps you understand user preferences and pain points.

- Incremental improvements

A/B testing enables product managers to make small, iterative changes to their products. This approach allows for continuous optimisation of features and designs without the risk of major overhauls.

- Validation of hypotheses

Product managers often have theories about what users want or how they might behave. A/B testing provides a scientific method to test these assumptions about user needs and preferences. This evidence-based approach helps you avoid investing resources in changes that sound good in theory but don't improve the user experience or business metrics in practice.

- Personalisation opportunities

A/B testing can reveal that different user segments respond differently to various features or designs. This insight is invaluable for creating personalised experiences and enables you to tailor experiences for different user groups, potentially increasing engagement and satisfaction across your entire user base.

- Cost-effectiveness

A/B testing helps prevent investment in features or designs that don't resonate with users. By testing ideas on a smaller scale before full implementation, you can avoid costly mistakes and allocate resources more efficiently.